Reduce Cloud Computing Expenses Without Sacrificing Performance

Introduction

Imagine needing a large storage solution for your important files and photos, but instead of purchasing a costly hard drive, you rent space in a highly secure and accessible online storage system. This is the essence of cloud computing—providing flexible and scalable access to your data from anywhere with an internet connection. However, just as renting an apartment can become expensive, utilizing cloud resources can also incur significant costs, especially when large amounts of storage and computational power are required. Reducing these costs without sacrificing performance is a key challenge that many businesses face today.

The Ubiquity of AI

Artificial Intelligence (AI) is reshaping industries worldwide, including healthcare, finance, manufacturing, and retail. As AI technology evolves, its integration into business processes has become essential for maintaining a competitive edge and fostering innovation. However, this rapid expansion comes with a notable challenge: escalating costs associated with cloud computing and storage. As cloud computing becomes more integral to business operations, finding ways to manage these costs effectively while maintaining high performance is crucial.

The Problem: Escalating Compute and Storage Costs

As businesses scale their AI operations, they encounter significant expenses related to computing and storage. According to data from Ripik.ai's extensive deployments, these costs depend on both the workload (training or inference) and the type of AI model, with different models exhibiting widely varying compute requirements.

Training and Inference Costs

Training AI models can be a major expense, accounting for 30% to 70% of total computational costs, with inference adding further cost. The need for substantial storage, particularly for image data, exacerbates the financial burden. As organizations scale up their AI initiatives, reducing cloud computing costs becomes a primary objective.

Machine Learning Numerical Models

Machine learning numerical models are at the top of the list when it comes to computational power demands. These models perform complex calculations and data processing tasks, often involving large datasets and intricate algorithms. The computational requirements for these models can be up to five times greater than those for generative AI models. This high demand stems from the intensive processing needed to train and fine-tune the models to achieve high accuracy and reliability.

Generative AI Models

Generative AI models, such as those used to create text, images, or even videos, require substantial computational resources, though they are less demanding than numerical models. These models are built on deep neural networks, which consume considerable computing power, especially during training. While generative AI models are less compute-intensive than numerical models, they still account for a significant portion of overall computational expenses.

Vision AI Models

Vision AI models, which are designed to analyze and interpret visual data such as images and videos, fall between numerical and generative models in terms of compute requirements: they need twice the computing power of generative AI models. The increased demand is due to the complexity of processing visual information, which often requires advanced techniques like convolutional neural networks (CNNs) and extensive data augmentation to improve model performance. Vision AI applications, such as object detection, image classification, and video analysis, require powerful computational resources to deliver accurate and timely results.
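Normalizing generative AI to 1 unit of compute, the ratios above can be expressed as simple multipliers. The sketch below is illustrative only: the multipliers are the figures quoted in this post (numerical models up to 5x, vision models 2x), while the workload mix and the function name are hypothetical.

```python
# Relative compute multipliers quoted in this post, normalized to generative AI = 1.
# The numerical-model figure is an upper bound ("up to five times").
RELATIVE_COMPUTE = {
    "generative": 1.0,
    "vision": 2.0,
    "numerical": 5.0,
}


def relative_compute_units(workload_mix: dict[str, float]) -> float:
    """Return total compute in generative-AI-equivalent units.

    workload_mix maps a model family to a count of comparable workloads
    (a hypothetical unit chosen for illustration).
    """
    return sum(RELATIVE_COMPUTE[family] * count for family, count in workload_mix.items())


if __name__ == "__main__":
    # Hypothetical portfolio: 4 generative, 3 vision, and 2 numerical workloads.
    mix = {"generative": 4, "vision": 3, "numerical": 2}
    print(relative_compute_units(mix))  # 4*1 + 3*2 + 2*5 = 20.0
```

In this hypothetical mix, the two numerical workloads account for half of the total compute even though they are the smallest group, which is why they tend to dominate optimization efforts.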

The Solution: Ripik.ai's Cutting-Edge Research

Ripik.ai has dedicated two years to pioneering research focused on reducing cloud computing costs without sacrificing performance. By leveraging advanced model architectures and strategic partnerships, Ripik.ai offers innovative solutions for optimizing both compute and storage expenditures.

Advanced Model Architectures

Ripik.ai’s research into advanced model architectures aims to solve problems more efficiently, reducing computational demands. This involves optimizing algorithms and network structures to improve AI performance while lowering resource requirements.

Optimized Architectures

Through meticulous refinement of model structures, Ripik.ai achieves significant reductions in computational demands. By fine-tuning algorithms and adjusting network architectures, the company ensures that AI models operate efficiently without compromising on accuracy or reliability. This optimization process not only enhances performance metrics like latency and time-to-completion but also minimizes the overall compute resources required, leading to cost savings for organizations deploying these models.
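To illustrate how a change in network structure can cut compute without changing a layer's input and output shapes, the sketch below compares the multiply-accumulate (MAC) count of a standard convolution with that of a depthwise-separable convolution, a well-known technique popularized by the MobileNet family of models. This is a generic example, not a description of Ripik.ai's own optimizations, and the layer sizes are hypothetical.

```python
def standard_conv_macs(h_out: int, w_out: int, c_in: int, c_out: int, k: int) -> int:
    """MACs for a k x k convolution mapping c_in channels to c_out channels."""
    return h_out * w_out * c_in * c_out * k * k


def depthwise_separable_macs(h_out: int, w_out: int, c_in: int, c_out: int, k: int) -> int:
    """MACs for a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    depthwise = h_out * w_out * c_in * k * k   # one k x k filter per input channel
    pointwise = h_out * w_out * c_in * c_out   # 1 x 1 conv mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    # Hypothetical layer: 56 x 56 output, 128 -> 128 channels, 3 x 3 kernel.
    std = standard_conv_macs(56, 56, 128, 128, 3)
    sep = depthwise_separable_macs(56, 56, 128, 128, 3)
    print(std, sep, round(std / sep, 1))  # 462422016 54992896 8.4
```

The cost ratio works out to 1/c_out + 1/k^2, so the saving grows with the channel count; whether accuracy holds up after such a change has to be validated for each task.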

Hybrid Architectures

Ripik.ai implements hybrid architectures that combine on-premise infrastructure with cloud resources. This approach involves using on-premise servers for approximately 80% of computational tasks, while offloading the remaining workload to the cloud. This setup offers:

- Cost Efficiency: Reduces reliance on expensive cloud services by leveraging on-premise resources.

- Flexibility: Allows dynamic allocation of workloads between on-premise and cloud environments, enhancing operational efficiency.

- Scalability: Facilitates seamless scaling of AI operations, accommodating both training and inference demands.

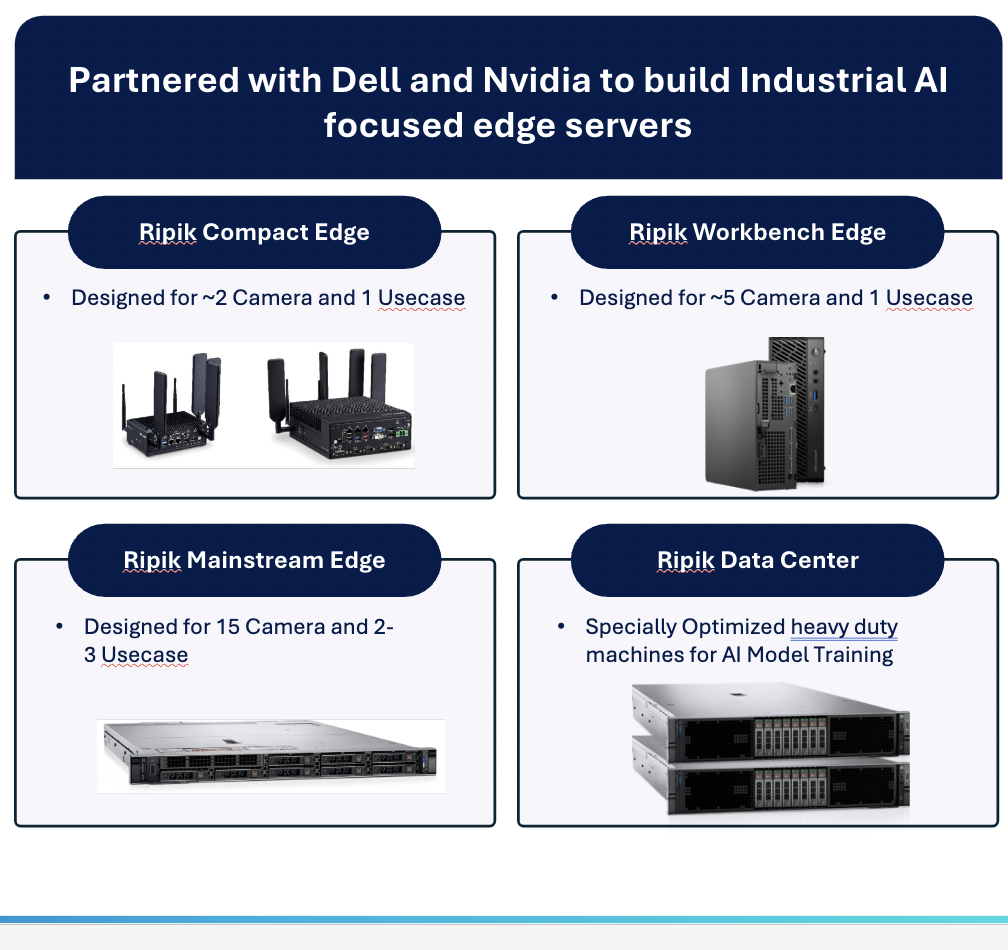
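One simple way to picture the on-premise/cloud split is a "burst to cloud" rule: jobs fill on-premise capacity first, and only the overflow goes to the cloud. The sketch below assumes GPU-hours as the unit of work and uses hypothetical capacity and hourly rates (the 3x price gap is an arbitrary illustration); it is not Ripik.ai's scheduler.

```python
from dataclasses import dataclass


@dataclass
class Allocation:
    on_prem_hours: float
    cloud_hours: float
    cost: float


def allocate(demand_hours: float, on_prem_capacity_hours: float,
             on_prem_rate: float, cloud_rate: float) -> Allocation:
    """Fill on-premise capacity first, then burst the remainder to the cloud.

    Rates are hypothetical effective costs per GPU-hour; on_prem_rate is
    assumed to include amortized hardware, power, and operations.
    """
    on_prem = min(demand_hours, on_prem_capacity_hours)
    cloud = demand_hours - on_prem
    return Allocation(on_prem, cloud, on_prem * on_prem_rate + cloud * cloud_rate)


if __name__ == "__main__":
    # Hypothetical month: 10,000 GPU-hours of demand, on-prem sized for 8,000 (80%).
    hybrid = allocate(10_000.0, 8_000.0, on_prem_rate=1.0, cloud_rate=3.0)
    cloud_only = allocate(10_000.0, 0.0, on_prem_rate=1.0, cloud_rate=3.0)
    print(hybrid.cost, cloud_only.cost)  # 14000.0 30000.0
```

Sizing on-premise capacity for the steady baseline and leaving peaks to the cloud avoids paying for idle hardware while keeping most hours at the lower rate.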

On-Premise Architecture in Detail

Based on our understanding of Vision AI use cases and the infrastructure required to run AI workloads, we at Ripik.ai have identified the ideal server configurations for computer vision use cases from the myriad of configurations available. This enables large enterprises to save millions of dollars when setting up their on-premise infrastructure.
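Choosing a server configuration can be framed as picking the option with the lowest total cost that still meets a required throughput. In the sketch below, the configuration names, throughput figures (images per second), and prices are all hypothetical placeholders, not Ripik.ai's recommended configurations.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerConfig:
    name: str
    images_per_sec: float  # measured inference throughput per server (hypothetical)
    price: float           # purchase price per server (hypothetical)


def cheapest_fleet(configs: list[ServerConfig], required_images_per_sec: float):
    """Return (config, server_count, total_price) with the lowest total price.

    Returns None if configs is empty.
    """
    best = None
    for cfg in configs:
        count = math.ceil(required_images_per_sec / cfg.images_per_sec)
        total = count * cfg.price
        if best is None or total < best[2]:
            best = (cfg, count, total)
    return best


if __name__ == "__main__":
    candidates = [
        ServerConfig("config-a", images_per_sec=400, price=30_000),
        ServerConfig("config-b", images_per_sec=1_500, price=90_000),
        ServerConfig("config-c", images_per_sec=900, price=45_000),
    ]
    cfg, count, total = cheapest_fleet(candidates, required_images_per_sec=5_000)
    print(cfg.name, count, total)  # config-c 6 270000
```

Note that neither the cheapest server nor the fastest one wins here: the best choice depends on price per unit of throughput and on how many whole servers are needed, which is why benchmarking against the actual workload matters.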

Reducing Storage Costs

Effective management of storage is crucial for cost reduction. Ripik.ai employs several strategies to minimize storage expenses:

Dynamic Compression

Ripik.ai utilizes dynamic compression techniques to reduce the size of stored data. By compressing data dynamically, the platform minimizes storage requirements without compromising data integrity or accessibility.
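A minimal sketch of compression that adapts to the data, assuming lossless zlib compression and a simple rule: keep the compressed form only when it actually saves space, since already-compressed formats such as JPEG often do not shrink further. Ripik.ai's own dynamic compression method is not described in this post; the function names, one-byte header, and 10% threshold are hypothetical.

```python
import os
import zlib

RAW, DEFLATE = b"\x00", b"\x01"  # one-byte header recording how the payload is stored


def pack(data: bytes, min_saving: float = 0.10) -> bytes:
    """Compress losslessly if it saves at least min_saving of the size, else store raw."""
    compressed = zlib.compress(data, level=6)
    if len(compressed) <= len(data) * (1 - min_saving):
        return DEFLATE + compressed
    return RAW + data


def unpack(blob: bytes) -> bytes:
    """Restore the original bytes exactly."""
    header, payload = blob[:1], blob[1:]
    return zlib.decompress(payload) if header == DEFLATE else payload


if __name__ == "__main__":
    repetitive = b"sensor_reading=42;" * 1000  # compresses well
    noisy = os.urandom(4096)                   # behaves like already-compressed data
    for sample in (repetitive, noisy):
        blob = pack(sample)
        assert unpack(blob) == sample          # integrity check: lossless round trip
        print(len(sample), "->", len(blob))
```

Because the compression is lossless and every stored object records its own format, data integrity is preserved and readers never need to know in advance which objects were compressed.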

Archival Cold Storage

To manage less frequently accessed data cost-effectively, Ripik.ai employs archival cold storage solutions. This approach involves moving data that is not accessed regularly to low-cost storage tiers designed for long-term retention.
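The tiering rule can be sketched as a function that selects objects whose last access is older than a cutoff. The 90-day threshold, the object record, and the "hot"/"cold" tier names are hypothetical; cloud providers also offer managed lifecycle and tiering features for this, whose exact options should be checked in their documentation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class StoredObject:
    key: str
    size_bytes: int
    last_accessed: datetime
    tier: str = "hot"  # hypothetical tier names: "hot" or "cold"


def select_for_archive(objects: list[StoredObject], now: datetime,
                       max_idle: timedelta = timedelta(days=90)) -> list[StoredObject]:
    """Return hot-tier objects that have not been accessed within max_idle."""
    cutoff = now - max_idle
    return [o for o in objects if o.tier == "hot" and o.last_accessed < cutoff]


def archive(objects: list[StoredObject], now: datetime) -> int:
    """Mark idle objects as cold and return the number of bytes moved."""
    moved = select_for_archive(objects, now)
    for obj in moved:
        obj.tier = "cold"  # a real system would move the data to the cold tier here
    return sum(obj.size_bytes for obj in moved)


if __name__ == "__main__":
    now = datetime(2024, 6, 1, tzinfo=timezone.utc)
    objects = [
        StoredObject("frames/line1/2024-01-05.tar", 5_000_000_000, now - timedelta(days=150)),
        StoredObject("frames/line1/2024-05-28.tar", 4_000_000_000, now - timedelta(days=3)),
    ]
    print(archive(objects, now))  # 5000000000
```

The threshold is a trade-off: cold tiers are cheaper to store in but typically slower and costlier to read back, so data that is still queried regularly should stay in the hot tier.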

Strategic Partnerships

Ripik.ai collaborates with industry leaders such as Nvidia, Dell, AWS, and Microsoft to enhance its AI solutions. These partnerships leverage Nvidia's GPU technology, Dell's hardware optimizations, AWS's scalable cloud services, and Microsoft's robust software solutions to deliver high-performance AI capabilities while controlling costs.

Partner with Ripik.ai

Ripik.ai is eager to collaborate with data science teams from Fortune 100 companies to deploy its platform and achieve significant reductions in infrastructure costs. By leveraging Ripik.ai's advanced research and strategic alliances, businesses can optimize their AI deployments without incurring prohibitive expenses.

For more information on how Ripik.ai can help your organization reduce cloud computing costs and enhance AI performance, contact us today. Together, we can drive innovation and efficiency in your AI initiatives.
